OnlyFake's $15 Fake ID

AI's Dark Side

Welcome to this Wednesday edition of

The AI Reverie

The only newsletter you need to stay on top of the AI news, tools and tactics.

The Gentleman’s Agreement:

We don’t need donations or gifts of any kind. All we ask from you, dear reader, is that you open each email and click at least one link in it.

Thank you.

Now let’s dive in:

Wednesday, 7th of February

Today we’ll cover:

Stanford's AI Revolutionizes Medical Imaging with Superior Chest X-ray Interpretation

Google Lookout: AI Unlocks Visual World for the Blind with Image Q&A

OpenAI's C2PA: The Digital Truth Seal for AI-Generated Imagery

Deep-Dive:

AI's Dark Side: OnlyFake's $15 Neural Network IDs Threaten Cybersecurity

QUICK REVERIES

AI News

Stanford's AI Revolutionizes Medical Imaging with Superior Chest X-ray Interpretation

Stanford's CheXagent, a foundation model for chest X-ray (CXR) interpretation, and CheXinstruct, the instruction-tuning dataset it is trained on, mark a significant step forward in automated CXR analysis, with the potential to improve clinical decision-making and patient care. CheXagent pairs a clinical language model with a vision encoder and, according to its authors, outperforms earlier models on CXR interpretation tasks.

Google Lookout: AI Unlocks Visual World for the Blind with Image Q&A

Google's Lookout app now empowers the visually impaired to understand their surroundings through photos. By asking questions about uploaded images, users receive detailed descriptions from AI, enhancing their perception of the world.

OpenAI's C2PA: The Digital Truth Seal for AI-Generated Imagery

OpenAI has integrated the C2PA standard into DALL·E 3, embedding metadata that helps verify the origin and authenticity of images, a move towards greater digital transparency. The metadata increases file sizes slightly, a small price for the assurance it provides. It is not foolproof, though: the metadata can be stripped out by various means.
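For the curious, here is a rough sketch of why embedded provenance metadata is easy to lose. It assumes the image is a PNG and that the C2PA manifest lives in a chunk of type `caBX`, which is how we understand the C2PA specification to embed manifests in PNG files. The helper names (`iter_chunks`, `has_c2pa_manifest`, `strip_chunk`, `build_demo_png`) are our own, and the demo image carries placeholder bytes rather than a real signed manifest. The check only looks for the chunk; it does not validate any signature.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Assumption: the chunk type the C2PA spec uses for manifests embedded in PNG.
C2PA_CHUNK = b"caBX"


def iter_chunks(png_bytes):
    """Yield (chunk_type, chunk_data) for every chunk in a PNG byte string."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png_bytes):
        length, ctype = struct.unpack(">I4s", png_bytes[pos:pos + 8])
        yield ctype, png_bytes[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def make_chunk(ctype, data):
    """Serialize one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(ctype + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + ctype + data + struct.pack(">I", crc)


def has_c2pa_manifest(png_bytes):
    """Presence check only: this does NOT verify the manifest's signature."""
    return any(ctype == C2PA_CHUNK for ctype, _ in iter_chunks(png_bytes))


def strip_chunk(png_bytes, unwanted):
    """Rebuild the PNG without chunks of the given type; pixels are untouched."""
    kept = [make_chunk(t, d) for t, d in iter_chunks(png_bytes) if t != unwanted]
    return PNG_SIGNATURE + b"".join(kept)


def build_demo_png(with_manifest):
    """Build a 1x1 grayscale PNG, optionally with a placeholder caBX chunk."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x00")  # one filter byte + one pixel
    chunks = [make_chunk(b"IHDR", ihdr)]
    if with_manifest:
        chunks.append(make_chunk(C2PA_CHUNK, b"placeholder, not a real manifest"))
    chunks += [make_chunk(b"IDAT", idat), make_chunk(b"IEND", b"")]
    return PNG_SIGNATURE + b"".join(chunks)


signed = build_demo_png(with_manifest=True)
stripped = strip_chunk(signed, C2PA_CHUNK)
print(has_c2pa_manifest(signed))    # True
print(has_c2pa_manifest(stripped))  # False
```

The image data is identical before and after; only the label is gone. That is why a missing C2PA tag tells you nothing about whether an image was AI-generated, while a present and validated one is a useful signal.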

AI DEEP FAKES

AI's Dark Side: OnlyFake's $15 Neural Network IDs Threaten Cybersecurity

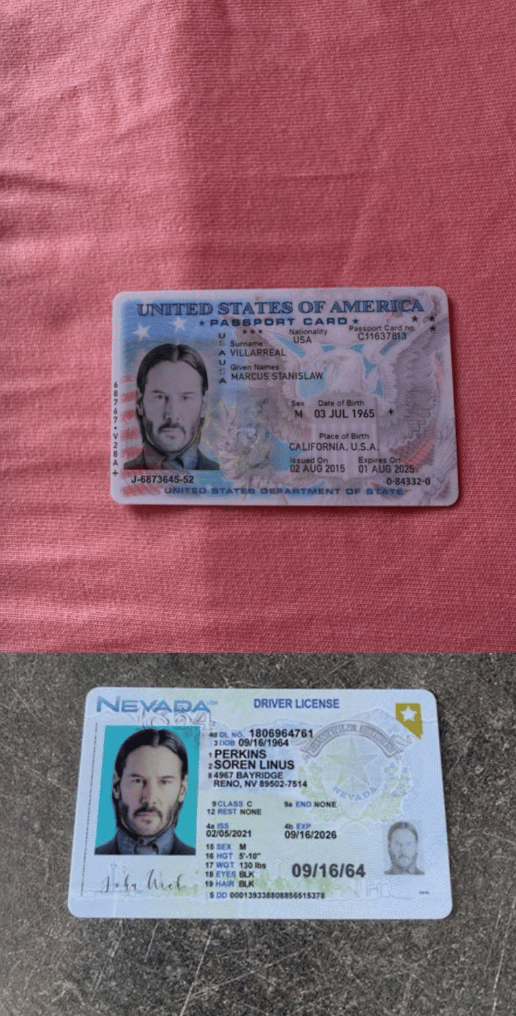

A screenshot of the OnlyFake generation panel. Image: 404 Media.

As AI tools advance at a rapid pace, a new kind of threat is emerging: AI-generated fake IDs.

An underground website called OnlyFake claims to use "neural networks" to produce realistic-looking fake IDs for just $15 each, according to 404 Media. The service could make bank fraud, money laundering, and similar crimes easier to commit.

OnlyFake's technology allows users to generate fake IDs almost instantly, complete with any name, biographical information, address, expiration date, and signature they desire. The IDs are so convincing that they can potentially bypass various online verification systems.

The site's owner, who goes by the pseudonym John Wick, claims that hundreds of documents can be generated at once using data from an Excel table. The process involves entering the desired information, uploading a passport photo, and cycling through automatically generated images that transform the entered name into a signature.

The site's offerings aren't limited to just IDs. It also provides other technical measures to make its images more convincing, such as a metadata changer that fabricates details like the device that allegedly took the photo, the date and time of creation, and spoofed GPS coordinates.

The site's capabilities don't stop there. OnlyFake's community members are also working on ways to bypass video verification systems, which require users to physically hold up their ID to the camera. One approach they are exploring is animating still images of people holding up IDs.

In case you missed it: Hong Kong Finance Worker Falls Victim to $25M Deepfake Heist: A Stark Reminder of Digital Deception's Evolving Threat

This development raises serious concerns about the potential misuse of AI technology for fraudulent activities. As Senator Ron Wyden points out, AI-based tools that generate fake IDs and video deepfakes pose a real fraud problem for government agencies, financial institutions, and other companies. The United States, he says, desperately needs secure, authenticated IDs to enable Americans to verify their identity when conducting sensitive or high-risk transactions.

This story serves as a stark reminder of the double-edged sword that is technology. While it can bring about incredible advancements and conveniences, it can also be used for nefarious purposes if it falls into the wrong hands. It's a call to action for us to stay vigilant and proactive in our efforts to ensure the security and integrity of our digital identities[1].