AI Reverie | Future Blueprint

👀! OpenAI Disbands AI Safety Team

And more people leave

Welcome to this Saturday edition of The AI Reverie.

More drama in OpenAI's leadership: Ilya Sutskever and Jan Leike have resigned, leading to the disbanding of the company's AI safety team.

Let’s hope OpenAI has its ducks in a row and stays focused on the safe development of AI.

The Gentleman’s Agreement

We don’t need donations or gifts of any kind. All we ask from you, dear reader, is that you open each email and click at least one link in it.

Thank you!

Now let’s dive in:

Saturday 18th of May

Today we’ll cover:

- TOGETHER WITH Flot AI
- 👀 OpenAI Disbands Superalignment Team Amid Leadership Shake-Up

TOGETHER WITH FLOT AI

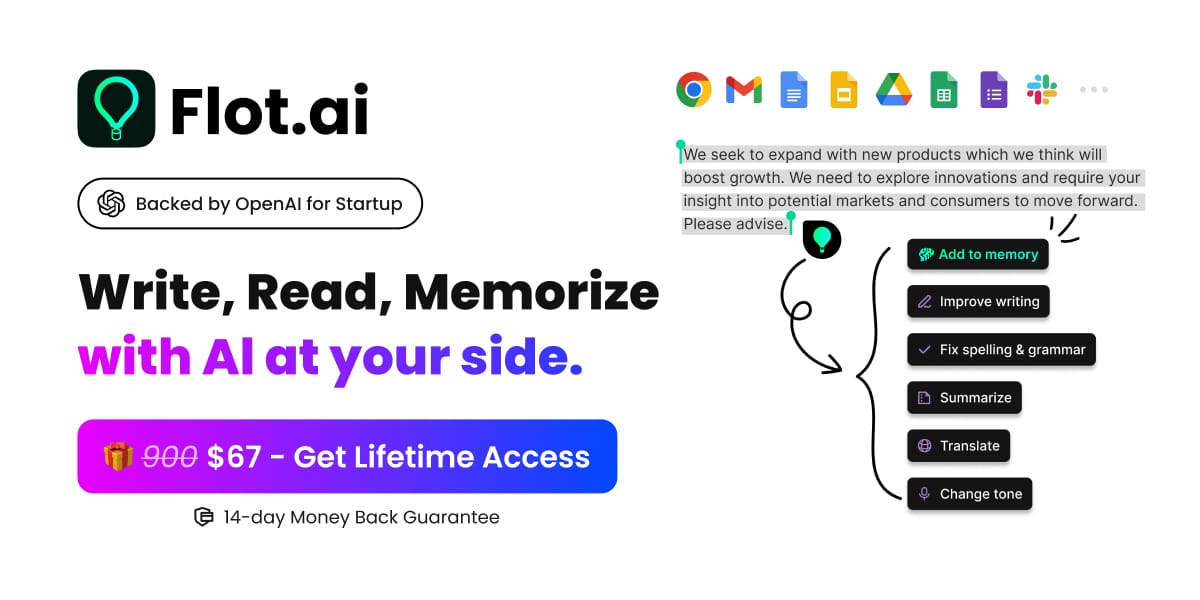

Your Everywhere and All-in-One AI Assistant

Imagine an AI companion that works across any website or app, helping you write better, read faster, and remember information. No more copying and pasting: everything is just one click away. Meet Flot AI! (Available on Windows and macOS.)

- Seamless integration with any website and app
- Comprehensive writing and reading support:
  - Improve writing
  - Rewrite content
  - Grammar check
  - Translate
  - Summarize
  - Adjust tone
  - Customized prompts
- Save, search, and recall information with one click

Subscribe to Oncely LTD & Flot AI Newsletters by clicking.

AI SAFETY

👀 OpenAI Disbands Superalignment Team Amid Leadership Shake-Up

Key leaders resign as OpenAI shifts focus, raising concerns about AI safety priorities.

OpenAI CEO Sam Altman (left) and Ilya Sutskever (right)

The Reverie

OpenAI has disbanded its Superalignment team, which was dedicated to ensuring that advanced AI systems align with human goals.

This decision follows the high-profile resignations of key leaders Ilya Sutskever and Jan Leike, sparking concerns about the company's commitment to AI safety.

The Details

Leadership Departures: Ilya Sutskever, co-founder and chief scientist, and Jan Leike, who co-led the Superalignment team with him, have both resigned. Leike publicly cited long-running disagreements with OpenAI's leadership.

Team Disbanded: The Superalignment team, formed to address the risks of superintelligent AI, has been dissolved, with its responsibilities integrated into broader research efforts.

Safety Concerns: Leike criticized OpenAI for prioritizing product development over safety, highlighting internal conflicts about the company's core priorities.

Why should you care?

The disbanding of OpenAI's Superalignment team and the departure of its leaders underscore the ongoing challenges in balancing AI innovation with safety.

As AI technology rapidly advances, ensuring that these systems are developed responsibly remains crucial.

This development raises important questions about how AI companies prioritize safety and the potential risks of sidelining these efforts in favor of rapid product development.

Source

Recommended reading

If we had to recommend other newsletters, these would be our picks:

Agent. AI

Written by Dharmesh Shah, co-founder and CTO of HubSpot, who draws on a data-science background to write in-depth, technical insights into how AI works. A great supplement to The AI Reverie.

AI Minds Newsletter

“Navigating the Intersection of Human Minds and AI”. This newsletter dives deeper into use cases and features research papers and tools that help you become smarter about AI. Highly recommended reading.

✨TOGETHER WITH AI REVERIE SURVEY

To help us create the best experience for you, please answer a few quick questions in the survey below:

FEEDBACK

What did you think about today's email? Your feedback helps us create better emails for you!

We're always on the lookout for ways to spice up our newsletter and make it something you're excited to open.

Got any cool ideas on how we can do better? Just hit 'reply' and let us know what you think.